
The previous two articles implemented video playback. In this one I explain how to decode audio with FFmpeg and play it back with NAudio.

Result preview

The preview is a GIF, so you cannot hear the audio, but it does show that playing, pausing, stopping, and seeking all work.

Preparation: add the NAudio library:

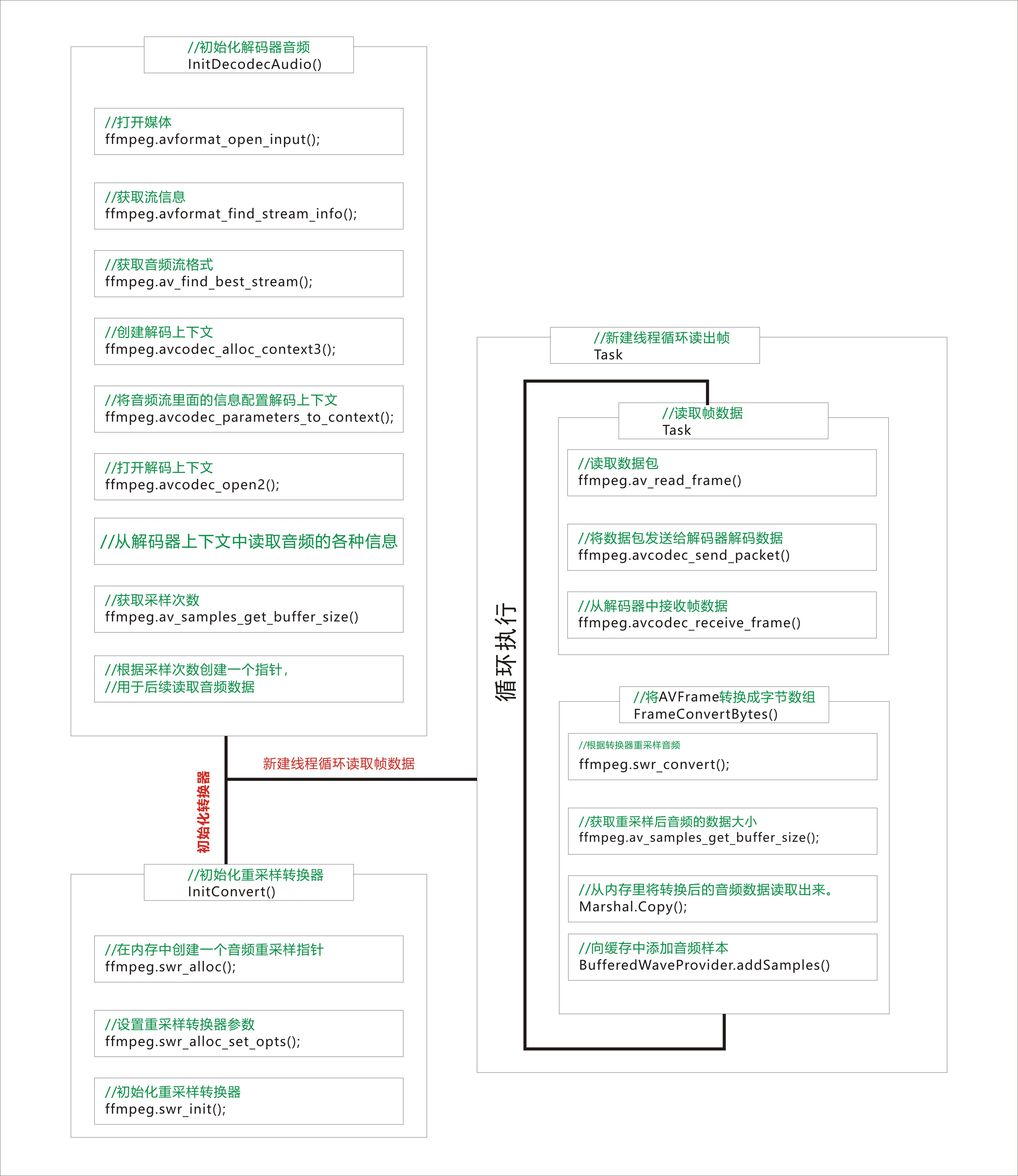

I. Audio decoding and playback flow

As the flowchart shows, decoding audio is much like decoding video. Only two steps differ: resampling, and converting the frame data into a byte array.

1. Initialize the audio decoder

    public void InitDecodecAudio(string path)
    {
        int error = 0;
        // Create a media format context
        format = ffmpeg.avformat_alloc_context();
        var tempFormat = format;
        // Open the media file
        error = ffmpeg.avformat_open_input(&tempFormat, path, null, null);
        if (error < 0)
        {
            // On failure FFmpeg frees the context, so drop the stale pointer
            format = null;
            Debug.WriteLine("Failed to open the media file");
            return;
        }
        // Probe the stream information
        ffmpeg.avformat_find_stream_info(format, null);
        AVCodec* codec;
        // Find the index of the audio stream
        audioStreamIndex = ffmpeg.av_find_best_stream(format, AVMediaType.AVMEDIA_TYPE_AUDIO, -1, -1, &codec, 0);
        if (audioStreamIndex < 0)
        {
            Debug.WriteLine("No audio stream found");
            return;
        }
        // Get the audio stream
        audioStream = format->streams[audioStreamIndex];
        // Create the decoder context
        codecContext = ffmpeg.avcodec_alloc_context3(codec);
        // Copy the stream's codec parameters into the decoder context
        error = ffmpeg.avcodec_parameters_to_context(codecContext, audioStream->codecpar);
        if (error < 0)
        {
            Debug.WriteLine("Failed to set the decoder parameters");
            return;
        }
        error = ffmpeg.avcodec_open2(codecContext, codec, null);
        if (error < 0)
        {
            Debug.WriteLine("Failed to open the decoder");
            return;
        }
        // Media duration (format->duration is in microseconds)
        Duration = TimeSpan.FromMilliseconds(format->duration / 1000);
        // Codec id
        CodecId = codec->id.ToString();
        // Codec name
        CodecName = ffmpeg.avcodec_get_name(codec->id);
        // Bit rate
        Bitrate = codecContext->bit_rate;
        // Number of audio channels
        Channels = codecContext->channels;
        // Channel layout
        ChannelLyaout = codecContext->channel_layout;
        // Sample rate
        SampleRate = codecContext->sample_rate;
        // Sample format
        SampleFormat = codecContext->sample_fmt;
        // Despite its name, BitsPerSample holds the size in bytes of the buffer
        // needed for one frame of 16-bit stereo output.
        // Note: frame_size can be 0 for codecs with a variable frame size.
        BitsPerSample = ffmpeg.av_samples_get_buffer_size(null, 2, codecContext->frame_size, AVSampleFormat.AV_SAMPLE_FMT_S16, 1);
        // Allocate the unmanaged output buffer
        audioBuffer = Marshal.AllocHGlobal((int)BitsPerSample);
        bufferPtr = (byte*)audioBuffer;
        // Initialize the resampler. The output layout is stereo so that it matches
        // the 2-channel buffer size computed above.
        InitConvert((int)ffmpeg.av_get_default_channel_layout(2), AVSampleFormat.AV_SAMPLE_FMT_S16, (int)SampleRate, (int)ChannelLyaout, SampleFormat, (int)SampleRate);
        // Allocate the packet and the frame
        packet = ffmpeg.av_packet_alloc();
        frame = ffmpeg.av_frame_alloc();
        State = MediaState.Read;
    }


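The initialization code closely follows the video decoding flow. The differences are that the requested stream type is audio instead of video, the media properties read are audio properties, and the method additionally computes the buffer size for one frame of audio and allocates an unmanaged buffer that later receives the converted audio data.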
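The buffer size requested during initialization can be checked by hand. As a minimal sketch, assuming packed (interleaved) output and an alignment of 1 as in the av_samples_get_buffer_size call above, the size is samples per channel × channels × bytes per sample. `PcmBufferMath` and `PackedBufferSize` are hypothetical names used only for illustration; they are not part of FFmpeg.AutoGen.

```csharp
using System;

// Hypothetical helper, for illustration only.
public static class PcmBufferMath
{
    // Bytes needed for packed PCM when no alignment padding is applied.
    public static int PackedBufferSize(int samplesPerChannel, int channels, int bytesPerSample)
    {
        if (samplesPerChannel <= 0 || channels <= 0 || bytesPerSample <= 0)
            throw new ArgumentException("All arguments must be positive.");
        // checked() turns a silent integer overflow into an exception.
        return checked(samplesPerChannel * channels * bytesPerSample);
    }
}
```

For a 1024-sample frame of 16-bit stereo audio, `PcmBufferMath.PackedBufferSize(1024, 2, 2)` gives 4096 bytes.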

2. Initialize the audio resampler

    /// <summary>
    /// Initializes the resampler.
    /// </summary>
    /// <param name="occ">Output channel layout</param>
    /// <param name="osf">Output sample format</param>
    /// <param name="osr">Output sample rate</param>
    /// <param name="icc">Input channel layout</param>
    /// <param name="isf">Input sample format</param>
    /// <param name="isr">Input sample rate</param>
    /// <returns>True if the resampler was initialized</returns>
    bool InitConvert(int occ, AVSampleFormat osf, int osr, int icc, AVSampleFormat isf, int isr)
    {
        // Allocate a resampling context
        convert = ffmpeg.swr_alloc();
        // Set the resampling parameters
        convert = ffmpeg.swr_alloc_set_opts(convert, occ, osf, osr, icc, isf, isr, 0, null);
        if (convert == null)
            return false;
        // Initialize the context; a negative return value means failure
        return ffmpeg.swr_init(convert) >= 0;
    }


This initializes a SwrContext, the audio resampler, from the given parameters. It is a different structure from the SwsContext used for video.
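To make the job of the resampler more concrete: many FFmpeg decoders output planar floating-point samples (one array per channel), while the playback side here expects interleaved 16-bit PCM. The following is a minimal sketch of that layout conversion only, written in plain C#. It is not how libswresample is implemented (which also handles rate conversion, channel mixing, and more), and `SampleLayoutSketch` is a hypothetical name.

```csharp
using System;

// Hypothetical illustration of planar float -> interleaved 16-bit PCM.
public static class SampleLayoutSketch
{
    public static byte[] PlanarFloatToInterleavedS16(float[][] planes)
    {
        int channels = planes.Length;
        int samples = channels == 0 ? 0 : planes[0].Length;
        foreach (var plane in planes)
        {
            if (plane.Length != samples)
                throw new ArgumentException("All channels must have the same length.");
        }

        var output = new byte[samples * channels * 2];
        int offset = 0;
        for (int i = 0; i < samples; i++)
        {
            for (int ch = 0; ch < channels; ch++)
            {
                // Clamp to [-1, 1], then scale to the 16-bit range.
                float clamped = Math.Max(-1f, Math.Min(1f, planes[ch][i]));
                short value = (short)Math.Round(clamped * short.MaxValue);
                // Write the sample in little-endian byte order.
                output[offset++] = (byte)(value & 0xFF);
                output[offset++] = (byte)((value >> 8) & 0xFF);
            }
        }
        return output;
    }
}
```

The output alternates left and right samples (L0 R0 L1 R1 ...), which is the layout the AV_SAMPLE_FMT_S16 output of the resampler uses.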

3. Read data from an audio frame

    public byte[] FrameConvertBytes(AVFrame* sourceFrame)
    {
        var tempBufferPtr = bufferPtr;
        // Resample the frame into the unmanaged buffer
        var outputSamplesPerChannel = ffmpeg.swr_convert(convert, &tempBufferPtr, sourceFrame->nb_samples, sourceFrame->extended_data, sourceFrame->nb_samples);
        // A negative value is an error code; zero means no output was produced
        if (outputSamplesPerChannel <= 0)
            return null;
        // Size in bytes of the resampled data (2 channels, 16-bit)
        var outPutBufferLength = ffmpeg.av_samples_get_buffer_size(null, 2, outputSamplesPerChannel, AVSampleFormat.AV_SAMPLE_FMT_S16, 1);
        byte[] bytes = new byte[outPutBufferLength];
        // Copy the converted audio data out of unmanaged memory
        Marshal.Copy(audioBuffer, bytes, 0, bytes.Length);
        return bytes;
    }


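Resampling a frame with ffmpeg.swr_convert() can change the size of the audio data, so ffmpeg.av_samples_get_buffer_size() is called again to compute the size of the converted data, which is then copied out of the unmanaged buffer through its pointer.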
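How much the sample count changes depends on the ratio of the output rate to the input rate. The sketch below mirrors the arithmetic commonly used with FFmpeg to size an output buffer (round up the total input samples, including any samples still buffered inside the resampler, scaled by the rate ratio). `ResampleMath` and its parameter names are hypothetical, and the buffered sample count is passed in as a plain number rather than queried from a real SwrContext.

```csharp
using System;

// Hypothetical helper: upper bound on output samples per channel.
public static class ResampleMath
{
    public static long MaxOutputSamples(long inputSamples, int inputRate, int outputRate, long bufferedSamples = 0)
    {
        if (inputSamples < 0 || bufferedSamples < 0 || inputRate <= 0 || outputRate <= 0)
            throw new ArgumentException("Sample counts must be non-negative and rates positive.");
        long total = inputSamples + bufferedSamples;
        // Integer ceiling of total * outputRate / inputRate.
        return (total * outputRate + inputRate - 1) / inputRate;
    }
}
```

With equal input and output rates, as in this article, the bound equals the input count. Converting 1024 samples from 48000 Hz to 44100 Hz gives at most 941 output samples per channel.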

4. Declare the audio playback components

    // NAudio playback components
    private WaveOut waveOut;
    private BufferedWaveProvider bufferedWaveProvider;

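5. On a background task, loop over the audio frames, decode each one into a byte array, and add the samples to bufferedWaveProvider. If adding the new data would exceed the buffer's capacity, the data already in the buffer is cleared first.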

    PlayTask = new Task(() =>
    {
        while (true)
        {
            // Only decode while playing
            if (audio.IsPlaying)
            {
                // Get the next audio frame
                if (audio.TryReadNextFrame(out var frame))
                {
                    var bytes = audio.FrameConvertBytes(&frame);
                    if (bytes == null)
                        continue;
                    // If the new data would not fit, clear the buffered data first
                    if (bufferedWaveProvider.BufferLength <= bufferedWaveProvider.BufferedBytes + bytes.Length)
                    {
                        bufferedWaveProvider.ClearBuffer();
                    }
                    // Add the audio samples to the buffer
                    bufferedWaveProvider.AddSamples(bytes, 0, bytes.Length);
                }
            }
        }
    });
    PlayTask.Start();

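Clearing the buffer when it is full discards audio that has not been played yet. One possible alternative, sketched below, is to wait until the buffer has room before adding new samples. This is an assumption about a design you might prefer, not what the article's code does. `BufferFeeder` is a hypothetical helper; it takes delegates instead of a BufferedWaveProvider so that it stays independent of NAudio.

```csharp
using System;
using System.Threading;

// Hypothetical helper: add samples only once the target buffer has room.
public static class BufferFeeder
{
    // freeBytes: returns the number of bytes currently free in the buffer.
    // addSamples: appends the samples to the buffer.
    // Returns false if cancellation was requested before room became available.
    public static bool AddWhenRoom(Func<int> freeBytes, Action<byte[]> addSamples,
                                   byte[] samples, CancellationToken token,
                                   int pollMilliseconds = 5)
    {
        while (freeBytes() < samples.Length)
        {
            if (token.IsCancellationRequested)
                return false;
            // Sleep briefly so the playback side can drain the buffer.
            Thread.Sleep(pollMilliseconds);
        }
        addSamples(samples);
        return true;
    }
}
```

With NAudio this could be wired up as `BufferFeeder.AddWhenRoom(() => bufferedWaveProvider.BufferLength - bufferedWaveProvider.BufferedBytes, b => bufferedWaveProvider.AddSamples(b, 0, b.Length), bytes, token)`, where `token` is a CancellationToken you supply to stop waiting when playback ends.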

II. The complete read-and-decode flow: the DecodecAudio class

    public unsafe class DecodecAudio : IMedia
    {
        // Media format (container) context
        AVFormatContext* format;
        // Decoder context
        AVCodecContext* codecContext;
        AVStream* audioStream;
        // Media packet
        AVPacket* packet;
        AVFrame* frame;
        SwrContext* convert;
        int audioStreamIndex;
        bool isNextFrame = true;
        // Time at which the previous frame was played
        TimeSpan lastTime;
        TimeSpan OffsetClock;
        object SyncLock = new object();
        Stopwatch clock = new Stopwatch();
        public event IMedia.MediaHandler MediaCompleted;
        // Whether playback is in progress
        public bool IsPlaying { get; protected set; }
        /// <summary>Media state</summary>
        public MediaState State { get; protected set; }
        /// <summary>Playback duration of one frame</summary>
        public TimeSpan frameDuration { get; protected set; }
        /// <summary>Media duration</summary>
        public TimeSpan Duration { get; protected set; }
        /// <summary>Playback position</summary>
        public TimeSpan Position { get => OffsetClock + clock.Elapsed; }
        /// <summary>Codec name</summary>
        public string CodecName { get; protected set; }
        /// <summary>Codec id</summary>
        public string CodecId { get; protected set; }
        /// <summary>Bit rate</summary>
        public long Bitrate { get; protected set; }
        // Number of channels
        public int Channels { get; protected set; }
        // Sample rate
        public long SampleRate { get; protected set; }
        // Despite its name, holds the output buffer size in bytes
        public long BitsPerSample { get; protected set; }
        // Channel layout
        public ulong ChannelLyaout { get; protected set; }
        /// <summary>Sample format</summary>
        public AVSampleFormat SampleFormat { get; protected set; }

        public void InitDecodecAudio(string path)
        {
            int error = 0;
            // Create a media format context
            format = ffmpeg.avformat_alloc_context();
            var tempFormat = format;
            // Open the media file
            error = ffmpeg.avformat_open_input(&tempFormat, path, null, null);
            if (error < 0)
            {
                // On failure FFmpeg frees the context, so drop the stale pointer
                format = null;
                Debug.WriteLine("Failed to open the media file");
                return;
            }
            // Probe the stream information
            ffmpeg.avformat_find_stream_info(format, null);
            AVCodec* codec;
            // Find the index of the audio stream
            audioStreamIndex = ffmpeg.av_find_best_stream(format, AVMediaType.AVMEDIA_TYPE_AUDIO, -1, -1, &codec, 0);
            if (audioStreamIndex < 0)
            {
                Debug.WriteLine("No audio stream found");
                return;
            }
            // Get the audio stream
            audioStream = format->streams[audioStreamIndex];
            // Create the decoder context
            codecContext = ffmpeg.avcodec_alloc_context3(codec);
            // Copy the stream's codec parameters into the decoder context
            error = ffmpeg.avcodec_parameters_to_context(codecContext, audioStream->codecpar);
            if (error < 0)
            {
                Debug.WriteLine("Failed to set the decoder parameters");
                return;
            }
            error = ffmpeg.avcodec_open2(codecContext, codec, null);
            if (error < 0)
            {
                Debug.WriteLine("Failed to open the decoder");
                return;
            }
            // Media duration (format->duration is in microseconds)
            Duration = TimeSpan.FromMilliseconds(format->duration / 1000);
            CodecId = codec->id.ToString();
            CodecName = ffmpeg.avcodec_get_name(codec->id);
            Bitrate = codecContext->bit_rate;
            Channels = codecContext->channels;
            ChannelLyaout = codecContext->channel_layout;
            SampleRate = codecContext->sample_rate;
            SampleFormat = codecContext->sample_fmt;
            // Size in bytes of the buffer for one frame of 16-bit stereo output.
            // Note: frame_size can be 0 for codecs with a variable frame size.
            BitsPerSample = ffmpeg.av_samples_get_buffer_size(null, 2, codecContext->frame_size, AVSampleFormat.AV_SAMPLE_FMT_S16, 1);
            // Allocate the unmanaged output buffer
            audioBuffer = Marshal.AllocHGlobal((int)BitsPerSample);
            bufferPtr = (byte*)audioBuffer;
            // Initialize the resampler. The output layout is stereo so that it
            // matches the 2-channel buffer size computed above.
            InitConvert((int)ffmpeg.av_get_default_channel_layout(2), AVSampleFormat.AV_SAMPLE_FMT_S16, (int)SampleRate, (int)ChannelLyaout, SampleFormat, (int)SampleRate);
            // Allocate the packet and the frame
            packet = ffmpeg.av_packet_alloc();
            frame = ffmpeg.av_frame_alloc();
            State = MediaState.Read;
        }

        // Handle of the unmanaged output buffer
        IntPtr audioBuffer;
        // Pointer to the same buffer
        byte* bufferPtr;

        /// <summary>
        /// Initializes the resampler.
        /// </summary>
        /// <param name="occ">Output channel layout</param>
        /// <param name="osf">Output sample format</param>
        /// <param name="osr">Output sample rate</param>
        /// <param name="icc">Input channel layout</param>
        /// <param name="isf">Input sample format</param>
        /// <param name="isr">Input sample rate</param>
        /// <returns>True if the resampler was initialized</returns>
        bool InitConvert(int occ, AVSampleFormat osf, int osr, int icc, AVSampleFormat isf, int isr)
        {
            // Allocate a resampling context
            convert = ffmpeg.swr_alloc();
            // Set the resampling parameters
            convert = ffmpeg.swr_alloc_set_opts(convert, occ, osf, osr, icc, isf, isr, 0, null);
            if (convert == null)
                return false;
            // Initialize the context; a negative return value means failure
            return ffmpeg.swr_init(convert) >= 0;
        }

        /// <summary>
        /// Tries to read the next frame.
        /// </summary>
        /// <param name="outFrame">The decoded frame</param>
        /// <returns>True if a new frame was decoded</returns>
        public bool TryReadNextFrame(out AVFrame outFrame)
        {
            if (lastTime == TimeSpan.Zero)
            {
                lastTime = Position;
                isNextFrame = true;
            }
            else
            {
                if (Position - lastTime >= frameDuration)
                {
                    lastTime = Position;
                    isNextFrame = true;
                }
                else
                {
                    // The current frame has not finished playing yet
                    outFrame = *frame;
                    return false;
                }
            }
            if (isNextFrame)
            {
                lock (SyncLock)
                {
                    int result = -1;
                    // Release the previous frame's data
                    ffmpeg.av_frame_unref(frame);
                    while (true)
                    {
                        // Release the previous packet's data
                        ffmpeg.av_packet_unref(packet);
                        // Read the next packet; the return value reports the status
                        result = ffmpeg.av_read_frame(format, packet);
                        // End of file or a read error: stop playback
                        if (result < 0)
                        {
                            outFrame = *frame;
                            StopPlay();
                            return false;
                        }
                        // Skip packets that do not belong to the audio stream
                        if (packet->stream_index != audioStreamIndex)
                            continue;
                        // Send the packet to the decoder
                        ffmpeg.avcodec_send_packet(codecContext, packet);
                        // Receive the decoded frame from the decoder
                        result = ffmpeg.avcodec_receive_frame(codecContext, frame);
                        if (result < 0)
                            continue;
                        // Playback duration of this frame: nb_samples / sample_rate seconds
                        frameDuration = TimeSpan.FromTicks((long)Math.Round(TimeSpan.TicksPerMillisecond * 1000d * frame->nb_samples / frame->sample_rate, 0));
                        outFrame = *frame;
                        return true;
                    }
                }
            }
            else
            {
                outFrame = *frame;
                return false;
            }
        }

        void StopPlay()
        {
            lock (SyncLock)
            {
                if (State == MediaState.None) return;
                IsPlaying = false;
                OffsetClock = TimeSpan.FromSeconds(0);
                // Reset() also stops the stopwatch
                clock.Reset();
                // A context opened with avformat_open_input is closed with avformat_close_input
                var tempFormat = format;
                ffmpeg.avformat_close_input(&tempFormat);
                format = null;
                var tempCodecContext = codecContext;
                ffmpeg.avcodec_free_context(&tempCodecContext);
                codecContext = null;
                var tempPacket = packet;
                ffmpeg.av_packet_free(&tempPacket);
                packet = null;
                var tempFrame = frame;
                ffmpeg.av_frame_free(&tempFrame);
                frame = null;
                var tempConvert = convert;
                ffmpeg.swr_free(&tempConvert);
                convert = null;
                Marshal.FreeHGlobal(audioBuffer);
                audioBuffer = IntPtr.Zero;
                bufferPtr = null;
                audioStream = null;
                audioStreamIndex = -1;
                // Reset the media properties
                Duration = TimeSpan.FromMilliseconds(0);
                CodecName = String.Empty;
                CodecId = String.Empty;
                Bitrate = 0;
                Channels = 0;
                ChannelLyaout = 0;
                SampleRate = 0;
                BitsPerSample = 0;
                State = MediaState.None;
                lastTime = TimeSpan.Zero;
                MediaCompleted?.Invoke(Duration);
            }
        }

        /// <summary>
        /// Seeks to a new position.
        /// </summary>
        /// <param name="seekTime">Target position in seconds</param>
        public void SeekProgress(int seekTime)
        {
            if (format == null || audioStreamIndex < 0)
                return;
            lock (SyncLock)
            {
                // Pause playback while seeking
                IsPlaying = false;
                clock.Stop();
                // Convert seconds to a timestamp in the audio stream's time base
                var timestamp = seekTime / ffmpeg.av_q2d(audioStream->time_base);
                // Move the audio stream to the target timestamp
                ffmpeg.av_seek_frame(format, audioStreamIndex, (long)timestamp, ffmpeg.AVSEEK_FLAG_BACKWARD | ffmpeg.AVSEEK_FLAG_FRAME);
                // Drop frames still buffered in the decoder from before the seek
                ffmpeg.avcodec_flush_buffers(codecContext);
                ffmpeg.av_frame_unref(frame);
                ffmpeg.av_packet_unref(packet);
                int error = 0;
                // Read until the packet timestamp reaches the target position
                while (packet->pts < timestamp)
                {
                    do
                    {
                        do
                        {
                            ffmpeg.av_packet_unref(packet);
                            error = ffmpeg.av_read_frame(format, packet);
                            // End of file or a read error
                            if (error < 0)
                                return;
                        } while (packet->stream_index != audioStreamIndex); // skip non-audio packets
                        // Send the packet to the decoder
                        ffmpeg.avcodec_send_packet(codecContext, packet);
                        // Receive the decoded frame
                        error = ffmpeg.avcodec_receive_frame(codecContext, frame);
                    } while (error == ffmpeg.AVERROR(ffmpeg.EAGAIN));
                }
                // Set the clock offset and restart the clock
                OffsetClock = TimeSpan.FromSeconds(seekTime);
                clock.Restart();
                // Resume playback
                IsPlaying = true;
                lastTime = TimeSpan.Zero;
            }
        }

        /// <summary>
        /// Converts an audio frame into a byte array.
        /// </summary>
        /// <param name="sourceFrame">The decoded audio frame</param>
        /// <returns>Interleaved 16-bit PCM, or null if nothing was converted</returns>
        public byte[] FrameConvertBytes(AVFrame* sourceFrame)
        {
            var tempBufferPtr = bufferPtr;
            // Resample the frame into the unmanaged buffer
            var outputSamplesPerChannel = ffmpeg.swr_convert(convert, &tempBufferPtr, sourceFrame->nb_samples, sourceFrame->extended_data, sourceFrame->nb_samples);
            // A negative value is an error code; zero means no output was produced
            if (outputSamplesPerChannel <= 0)
                return null;
            // Size in bytes of the resampled data (2 channels, 16-bit)
            var outPutBufferLength = ffmpeg.av_samples_get_buffer_size(null, 2, outputSamplesPerChannel, AVSampleFormat.AV_SAMPLE_FMT_S16, 1);
            byte[] bytes = new byte[outPutBufferLength];
            // Copy the converted audio data out of unmanaged memory
            Marshal.Copy(audioBuffer, bytes, 0, bytes.Length);
            return bytes;
        }

        public void Play()
        {
            if (State == MediaState.Play) return;
            clock.Start();
            IsPlaying = true;
            State = MediaState.Play;
        }

        public void Pause()
        {
            if (State != MediaState.Play) return;
            IsPlaying = false;
            // Keep the full position (offset plus elapsed time) before resetting the clock
            OffsetClock = Position;
            clock.Reset();
            State = MediaState.Pause;
        }

        public void Stop()
        {
            if (State == MediaState.None) return;
            StopPlay();
        }
    }

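Two pieces of timing arithmetic in the class are easy to verify in isolation: the playback duration of a frame (samples per channel divided by the sample rate) and the conversion from seconds to a stream timestamp (seconds divided by the time base). The sketch below restates both in plain C#. `AudioTiming` and its method names are hypothetical, and the time base is passed as a numerator and denominator instead of an AVRational.

```csharp
using System;

// Hypothetical helper restating the timing arithmetic used for pacing and seeking.
public static class AudioTiming
{
    // Duration of one frame: samplesPerChannel / sampleRate seconds.
    public static TimeSpan FrameDuration(int samplesPerChannel, int sampleRate)
    {
        if (samplesPerChannel < 0 || sampleRate <= 0)
            throw new ArgumentException("Invalid sample count or sample rate.");
        double ticks = (double)TimeSpan.TicksPerSecond * samplesPerChannel / sampleRate;
        return TimeSpan.FromTicks((long)Math.Round(ticks));
    }

    // seconds / (num / den) == seconds * den / num, truncated to an integer timestamp.
    public static long SecondsToStreamTimestamp(double seconds, int timeBaseNum, int timeBaseDen)
    {
        if (timeBaseNum <= 0 || timeBaseDen <= 0)
            throw new ArgumentException("The time base must be positive.");
        return (long)(seconds * timeBaseDen / timeBaseNum);
    }
}
```

For example, a 1152-sample frame at 48000 Hz lasts 24 ms, and seeking to 10 seconds in a stream with a time base of 1/44100 targets timestamp 441000.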

III. Layout and code-behind

    <Grid>
        <Grid.Resources>
            <Style TargetType="TextBlock" x:Key="Key">
                <Setter Property="control:DockPanel.Dock" Value="Left" />
                <Setter Property="HorizontalAlignment" Value="Left" />
                <Setter Property="FontWeight" Value="Bold" />
                <Setter Property="FontSize" Value="15" />
                <Setter Property="Foreground" Value="White" />
                <Setter Property="Width" Value="150" />
            </Style>
            <Style TargetType="TextBlock" x:Key="Value">
                <Setter Property="control:DockPanel.Dock" Value="Right" />
                <Setter Property="HorizontalAlignment" Value="Stretch" />
                <Setter Property="FontWeight" Value="Normal" />
                <Setter Property="FontSize" Value="15" />
                <Setter Property="Foreground" Value="White" />
            </Style>
        </Grid.Resources>
        <Grid.RowDefinitions>
            <RowDefinition />
            <RowDefinition Height="auto" />
        </Grid.RowDefinitions>
        <Grid.ColumnDefinitions>
            <ColumnDefinition />
            <ColumnDefinition Width="auto" />
        </Grid.ColumnDefinitions>
        <canvas:CanvasControl x:Name="canvas" />
        <StackPanel Background="Black" Grid.Column="1" Width="300">
            <control:DockPanel>
                <TextBlock Text="Duration" Style="{StaticResource Key}" />
                <TextBlock x:Name="dura" Text="00:00:00" Style="{StaticResource Value}" />
            </control:DockPanel>
            <control:DockPanel>
                <TextBlock Text="Position" Style="{StaticResource Key}" />
                <TextBlock x:Name="position" Text="00:00:00" Style="{StaticResource Value}" />
            </control:DockPanel>
            <control:DockPanel Background="LightBlue">
                <TextBlock Style="{StaticResource Key}">Has Video</TextBlock>
                <TextBlock Style="{StaticResource Value}" />
            </control:DockPanel>
            <control:DockPanel>
                <TextBlock Style="{StaticResource Key}" Text="Audio Codec" />
                <TextBlock Style="{StaticResource Value}" x:Name="audioCodec" />
            </control:DockPanel>
            <control:DockPanel>
                <TextBlock Style="{StaticResource Key}" Text="Audio Bitrate" />
                <TextBlock Style="{StaticResource Value}" x:Name="audioBitrate" />
            </control:DockPanel>
            <control:DockPanel>
                <TextBlock Style="{StaticResource Key}" Text="Audio Channels" />
                <TextBlock Style="{StaticResource Value}" x:Name="audioChannels" />
            </control:DockPanel>
            <control:DockPanel>
                <TextBlock Style="{StaticResource Key}" Text="Audio ChannelsLayout" />
                <TextBlock Style="{StaticResource Value}" x:Name="audioChannelsLayout" />
            </control:DockPanel>
            <control:DockPanel>
                <TextBlock Style="{StaticResource Key}" Text="Audio SampleRate" />
                <TextBlock Style="{StaticResource Value}" x:Name="audioSampleRate" />
            </control:DockPanel>
            <control:DockPanel>
                <TextBlock Style="{StaticResource Key}" Text="Audio BitsPerSample" />
                <TextBlock Style="{StaticResource Value}" x:Name="audioBitsPerSample" />
            </control:DockPanel>
        </StackPanel>
        <StackPanel Grid.Row="1" Grid.ColumnSpan="2">
            <Grid>
                <Grid.ColumnDefinitions>
                    <ColumnDefinition Width="auto" />
                    <ColumnDefinition />
                    <ColumnDefinition Width="auto" />
                </Grid.ColumnDefinitions>
                <TextBlock Text="{Binding ElementName=position,Path=Text,Mode=OneWay}" />
                <Slider Grid.Column="1" x:Name="progress" />
                <TextBlock Grid.Column="2" Text="00:00:00" x:Name="duration" />
            </Grid>
            <TextBox x:Name="pathBox" Text="C:UsersludinDesktop新建文件夹 (4)2.mp3" PlaceholderText="Enter a file path" />
            <StackPanel Grid.Row="1" Orientation="Horizontal" HorizontalAlignment="Center" VerticalAlignment="Center">
                <Button x:Name="play">Play</Button>
                <Button x:Name="pause">Pause</Button>
                <Button x:Name="stop">Stop</Button>
            </StackPanel>
        </StackPanel>
    </Grid>


    Task PlayTask;
    CanvasBitmap bitmap;
    DispatcherTimer timer = new DispatcherTimer();
    bool progressActivity = false;
    DecodecAudio audio = new DecodecAudio();
    // NAudio playback components
    private WaveOut waveOut;
    private BufferedWaveProvider bufferedWaveProvider;

    public FFmpegDecodecAudio()
    {
        this.InitializeComponent();
        Init();
        InitUi();
    }

    void Init()
    {
        waveOut = new WaveOut();
        // WaveFormat() defaults to 44.1 kHz, 16-bit, stereo PCM. A file with a
        // different sample rate plays at the wrong speed unless the resampler's
        // output rate matches this format.
        bufferedWaveProvider = new BufferedWaveProvider(new WaveFormat());
        waveOut.Init(bufferedWaveProvider);
        waveOut.Play();
        // Play
        play.Click += (s, e) =>
        {
            if (audio.State == MediaState.None)
            {
                // Initialize the audio decoder
                audio.InitDecodecAudio(pathBox.Text);
                DisplayVideoInfo();
            }
            audio.Play();
            timer.Start();
        };
        // Pause
        pause.Click += (s, e) => audio.Pause();
        // Stop
        stop.Click += (s, e) => audio.Stop();
        PlayTask = new Task(() =>
        {
            while (true)
            {
                // Only decode while playing
                if (audio.IsPlaying)
                {
                    // Get the next audio frame
                    if (audio.TryReadNextFrame(out var frame))
                    {
                        var bytes = audio.FrameConvertBytes(&frame);
                        if (bytes == null)
                            continue;
                        // If the new data would not fit, clear the buffered data first
                        if (bufferedWaveProvider.BufferLength <= bufferedWaveProvider.BufferedBytes + bytes.Length)
                        {
                            bufferedWaveProvider.ClearBuffer();
                        }
                        // Add the audio samples to the buffer
                        bufferedWaveProvider.AddSamples(bytes, 0, bytes.Length);
                    }
                }
            }
        });
        PlayTask.Start();
        audio.MediaCompleted += (s) =>
        {
            DispatcherQueue.TryEnqueue(Microsoft.UI.Dispatching.DispatcherQueuePriority.Normal, () =>
            {
                timer.Stop();
                progressActivity = false;
                DisplayVideoInfo();
            });
        };
    }

    void InitUi()
    {
        // Canvas drawing
        canvas.Draw += (s, e) =>
        {
            if (bitmap != null)
            {
                var te = Win2DUlit.CalcutateImageCenteredTransform(canvas.ActualSize, bitmap.Size);
                te.Source = bitmap;
                e.DrawingSession.DrawImage(te);
            }
        };
        timer.Interval = TimeSpan.FromMilliseconds(300);
        // The timer updates the progress bar
        timer.Tick += (s, e) =>
        {
            if (!audio.IsPlaying)
                return;
            position.Text = audio.Position.ToString();
            // Suppress seeking while the timer itself moves the slider
            progressActivity = false;
            progress.Value = audio.Position.TotalSeconds;
            progressActivity = true;
        };
        // The user moved the progress bar
        progress.ValueChanged += (s, e) =>
        {
            if (!audio.IsPlaying)
                return;
            if (progressActivity == true)
            {
                audio.SeekProgress((int)e.NewValue);
            }
        };
    }

    /// <summary>
    /// Displays the media information.
    /// </summary>
    void DisplayVideoInfo()
    {
        dura.Text = audio.Duration.ToString();
        audioCodec.Text = audio.CodecName;
        audioBitrate.Text = audio.Bitrate.ToString();
        audioChannels.Text = audio.Channels.ToString();
        audioChannelsLayout.Text = audio.ChannelLyaout.ToString();
        audioSampleRate.Text = audio.SampleRate.ToString();
        audioBitsPerSample.Text = audio.BitsPerSample.ToString();
        position.Text = audio.Position.ToString();
        progress.Maximum = audio.Duration.TotalSeconds;
    }


IV. Conclusion

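Decoding and playing audio is broadly the same as decoding and playing video. Only the resampling step and the way the data is read differ. The code above is enough for a simple implementation of audio playback with play, pause, stop, and seek.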

If you are interested, try the project demo to see how it runs.